Our Army

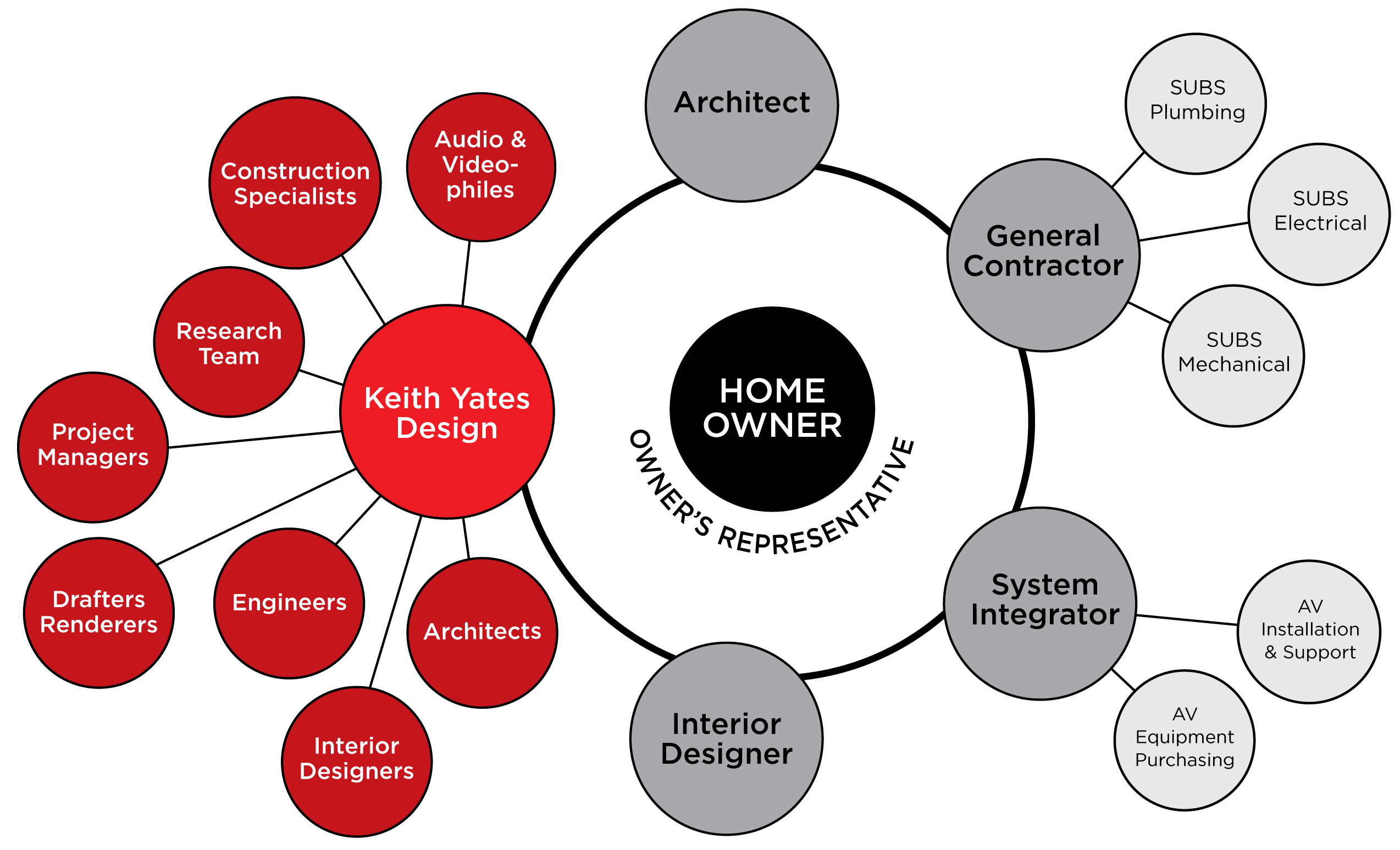

KYD is a team of architects, engineers, researchers, drafters, interior designers, project managers, construction specialists, and dyed-in-the-wool audio/videophiles. Our tangible product is a set of comprehensive BIM-based drawings and specifications, followed by bound engineering reports summarizing the results of our simulations and on-site tests. The permits are pulled by your architect. The room is built by your general contractor. The building services (mechanical, electrical, plumbing) are installed by your GC’s subcontractors. The A/V and control components are vended, installed, programmed and serviced by your systems integrator.

It’s a team effort with a lot of moving parts. With projects all over the world, we know that the most important factor influencing successful outcomes is the clarity of our documentation driven by a science-based and acoustically modeled approach.

Company Founder and President Keith Yates began his audio/video career in 1972, working for several specialty retailers in Northern California and the San Francisco Bay Area. After managing a high-end audio salon in Carmel, California in the late 1970s, he launched Keith Yates Audio Video in Sacramento in January 1981 and over the next 10 years built it into one of the nation’s premier high-end A/V retail and custom installation operations.

Although he greatly enjoyed educating clients on the merits of equipment and its installation, it became clear to him that even the best equipment could sound mediocre if the acoustics and design of the space were wrong. Recognizing that the only way to allow the gear to perform optimally and produce superior sound was to design rooms from the ground up, in 1991 he founded Keith Yates Design Group in Penryn, a small town in the nearby Sierra foothills.

As he developed and tested his theories, his research on the acoustics of residential-sized rooms for music and film reproduction began to receive recognition. Following a series of acoustic experiments published in the late 1980s and 1990s, Keith became recognized as an expert in creating indoor spaces that deepened and enhanced the audio experience for high-profile and discerning clients. His research articles, reference books, and other writings can be found in the resources section of this website.

KYD designs comprehensive venues—principally home theaters, media rooms, precision listening rooms and concert/recital halls—for private clients worldwide. Yates is also active as an author, teacher, seminar leader, industry panelist and consultant on the topics of acoustics, psychoacoustics, loudspeaker design, and new multimodal (auditory/visual/haptic) strategies for achieving what Coleridge called the “willing suspension of disbelief” or what Yates has termed Deep Entertainment™.

You’d be right to ask why we lavish so much attention on the subtleties.

Experience says: Because that’s where the goosebumps are.

Our Team

A talented team of seasoned pros stands behind every project.

Recognition

Yates has been featured or profiled in journals as diverse as Rolling Stone, GQ, Archi-Tech, H.A. Pro, The Business Journal, MacWorld, MacWorld Japan, California Executive and This Week in Consumer Electronics. He has also been the subject of a radio feature distributed worldwide by the Associated Press, and a segment on the Home and Garden cable television channel.

- Rolling Stone

- GQ

- Archi-Tech

- H.A. Pro

- The Business Journal

- MacWorld

- MacWorld Japan

- California Executive

- This Week in Consumer Electronics

- Home & Garden Channel

Publications

Knowledge Base

Audio and A/V Manufacturers

Accuphase http://www.accuphase.com/

Aerial Acoustics http://www.aerialacoustics.com/

Ambisonic Technologies http://ambisonicst.com/

ATC http://www.atcloudspeakers.co.uk/

Barco https://www.barco.com/en/

B&W Loudspeakers http://www.bwspeakers.com

Bag End http://www.bagend.com

Bryston http://www.bryston.ca

BSS Audio http://bssaudio.com/en-US

Christie Digital www.christiedigital.com

Cineak http://www.cineak.com/

Classe Audio http://www.classeaudio.com

Crest http://www.peaveycommercialaudio.com/

Crestron http://www.crestron.com/

Crown www.crownaudio.com

Danley Sound Lab http://www.danleysoundlabs.com/

Datasat http://www.datasatdigital.com/

Digital Projection http://www.digitalprojection.com

Draper http://www.draperinc.com

Dynaudio http://www.dynaudio.com

Genelec http://www.genelec.com

JL Audio http://www.jlaudio.com/

Focal http://www.focal.com/usa/en/

JBL Synthesis http://www.harmanluxuryaudio.com/

JBL Professional http://www.jblpro.com/

Kaleidescape http://www.kaleidescape.com/

KEF http://www.kef.com/html/us/

Lake/Lab Gruppen http://labgruppen.com/

Lexicon http://www.harmanluxuryaudio.com/

Magico http://www.magico.net/

Mark Levinson http://www.harmanluxuryaudio.com/

McIntosh http://www.mcintoshlabs.com

Meridian http://www.meridian-audio.com

Meyer Sound http://www.meyersound.com/

Oppo http://www.oppodigital.com/

Paradigm http://www.paradigm.com/

Powersoft Audio http://www.powersoft-audio.com/en/

Pro Audio Technology http://proaudiotechnology.com/

Procella http://procellaspeakers.com/

PSB http://www.psbspeakers.com/

Revel http://www.harmanluxuryaudio.com/

Rockport http://rockporttechnologies.com/

Runco http://www.runco.com

Savant https://www.savant.com/

Seymour Screen Excellence http://www.seymourscreenexcellence.com/

SIM 2 http://www.sim2.com/

Sonance http://www.sonance.com/

Sonus Faber http://www.sonusfaber.com/en-us

Sony http://store.sony.com/home-theater-projectors/cat-27-catid-all-tv-home-theater-projectors

Stewart Filmscreen http://www.stewartfilm.com

Triad Speakers http://www.triadspeakers.com

Trinnov http://www.trinnov.com/

Velodyne http://www.velodyne.com

Acoustical Materials Manufacturers

Acoustic Sciences Corp. (ASC) http://www.tubetrap.com

Acoustic Innovations http://www.acousticinnovations.com

Auralex http://www.auralex.com

Echobusters http://www.echobusters.com

Illbruck-Sonex http://www.pinta-elements.com/en/home/products/acoustic-wall-panels.html

Kinetics Noise Control http://www.kineticsnoise.com

RealAcoustix http://www.realacoustix.com

RPG Diffusor Systems http://www.rpginc.com

Vicoustic http://vicousticna.com

Acoustic Test & Calibration Equipment

ACO Pacific http://www.industry.net/aco.pacific

Bruel & Kjaer http://www.bk.dk

Goldline http://www.gold-line.com

DRA Labs http://www.mlssa.com

TEF http://www.gold-line.com/tef/tef.htm

Research and Professional Organizations

Acoustical Society of America (ASA) http://asa.aip.org

Audio Engineering Society (AES) http://www.aes.org

Custom Electronic Design & Installation Association (CEDIA) http://www.cedia.org

The Media Lab at MIT http://www.media.mit.edu

Stanford University Center for Computer Research in Music and Acoustics (CCRMA, pronounced “karma”) http://ccrma-www.stanford.edu/

University of California at Berkeley Center for New Music and Audio Technologies (CNMAT) http://www.cnmat.berkeley.edu

Darmstadt Auditory Research Group (Germany) http://www.th-darmstadt.de/fb/bio/agl/welcome.htm#Index

Imaging Science Foundation http://www.imagingscience.com

Institute of Acoustics (UK) http://ioa.essex.ac.uk/ioa/index.html

University of York Music Technology Group (England) http://www.york.ac.uk/inst/mustech/welcome.htm

IRCAM (Paris) http://www.ircam.fr/index-e.html

Physics & Psychophysics of Sound (Australia) http://www.anu.edu.au/ITA/ACAT/drw/PPofM/INDEX.html

Psychophysics Lab (New Zealand) http://www.vuw.ac.nz/~trills/psycho/

The Ear Club at UC-Berkeley http://ear.berkeley.edu/ear_club.html

Other Sites of Interest

Theo Kalomirakis Theaters http://www.tktheaters.com

Lucasfilm THX Division http://www.thx.com

MIT Press http://mitpress.mit.edu

Stereophile http://www.stereophile.com

Syn-Aud-Con http://www.synaudcon.com

TMH Corp. (Tom Holman) http://www.tmhlabs.com

Dolby Labs http://www.dolby.com

Books & Articles

A select bibliography.

ACOUSTICS AND PSYCHOACOUSTICS

Ando, Y. (1998). Architectural Acoustics: Blending Sound Sources, Sound Fields and Listeners. New York: Springer-Verlag. Attempting to fuse art and science, Ando combines subjective and objective factors involved in concert hall design with special attention to a model of the auditory-brain system.

Backus, J. (1969). The Acoustical Foundations of Music. New York: Norton.

Bech, S. (1998). Spatial Aspects of Reproduced Sound in Small Rooms. Journal of the Acoustical Society of America, 103 (1), 434-445. Part of a suite [see following] of important reports on the audibility of individual sound reflections off nearby walls in domestic-sized rooms.

Bech, S. (1995). Timbral Aspects of Reproduced Sound in Small Rooms, I. Journal of the Acoustical Society of America, 97 (3), 1717-1726.

Bech, S. (1996). Timbral Aspects of Reproduced Sound in Small Rooms, II. Journal of the Acoustical Society of America, 99 (6), 3539-3549.

Begault, D.R. (1994). 3-D Sound for Virtual Reality and Multimedia. New York: Academic. Useful, well-presented introduction to psychoacoustics, head-related transfer functions, virtual acoustic reality, etc.

Benade, A. H. (1976). Fundamentals of Musical Acoustics. London: Oxford Univ. Press.

Beranek, L. (1986). Acoustics. Woodbury, NY: Acoustical Society of America. An updated version of the 1954 classic textbook.

Beranek, L. (1996). Concert and Opera Halls: How they Sound. Woodbury NY: Acoustical Society of America. An approachable, well illustrated introduction by an eminent authority.

Blauert, J. (1997). Spatial Hearing: The Psychophysics of Human Sound Localization. Cambridge, MA: MIT Press. The author provides a thorough overview of the psychophysical research on spatial hearing in Europe and the United States. An updated version of the classic 1983 text on sound localization.

Boff, K. R., L. Kaufman, and J. P. Thomas (1986). Handbook of Perception and Human Performance. Sensory Processes and Perception, Vol. 1. New York: John Wiley & Sons. Various sound parameters are delineated and discussed, including their interpretation by individuals having auditory pathologies. An excellent first source for the definition of sound parameters and inquiry into the complexities of sonic phenomena.

Boff, K. R., and J. E. Lincoln, eds. (1988). Engineering Data Compendium: Human Perception and Performance. Ohio: Armstrong Aerospace Medical Research Laboratory, Wright-Patterson Air Force Base. This three-volume compendium distills information from the research literature about human perception and performance that is of potential value to systems designers. Plans include putting the compendium on CD. A separate user’s guide is also available.

Bryan, Michael, & Tempest, William. (1972). Does Infrasound Make Drivers ‘Drunk’? New Scientist, Mar, 584-586.

Case, J. (1966). Sensory Mechanisms. New York: Academic Press.

Cherry, E. C. Some Experiments on the Recognition of Speech with One and with Two Ears. Journal of the Acoustical Society of America, 25 (1953): 975-979. A classic paper on the cocktail-party effect, demonstrating the role of attention in the ability to track one voice from a crowd.

Clynes, M., ed. (1982). Music, Mind, and Brain: The Neuropsychology of Music. New York: Plenum. A collection of papers based on the conference on Physical and Neuropsychological Foundation of Music, which was held in Ossiach (wherever that is!) in 1980. It covers topics such as the nature of the language of music, how the brain organizes musical experience, perception of sound and rhythm, and how computers can contribute to a better understanding of musical processes.

Craik, R., & Naylor, G. (1985). Measurement of Reverberation Time via Probability Functions. Journal of Sound and Vibration, 102 (3), 453-454.

Cremer, L., and Muller, H. A. (1982). Principles and Applications of Room Acoustics. London: Applied Science. This two-volume set is a basic technical reference in the field, covering both the physics and psychoacoustics of sound in rooms.

Crowder, R., & Morton, J. (1969). Precategorical Acoustic Storage. Perception and Psychophysics, 5 (6), 365-373.

Davies, J. B. (1978). The Psychology of Music. Stanford, CA: Stanford University Press. A clear account, covering all of the fundamental areas: physics of sound, early psychophysical studies, melody perception, musical aptitude, as well as the basic musical parameters (pitch, loudness, timbre, duration) and their physical correlates. Particularly interesting are Davies’ human perspectives, e.g., “music exists in the ear of the listener, and nowhere else,” and his final chapter on specific musical instrument families and character traits of the individuals who play them.

Deutsch, D., ed. (1982). The Psychology of Music. New York: Academic. A well-known and well-regarded book, covers perception, analysis of timbre, rhythm and tempo, timing, melodic processes, and others.

Deutsch, D. The Tritone Paradox: An Influence of Language on Music Perception. Music Perception, 8 (1991): 335-347. The author presents evidence that individuals not only perceive the same musical intervals between complex tones differently but also that the perception of each individual is related to his or her own customary speech patterns.

Dixon, N. F. (1971). Subliminal Perception. London: McGraw-Hill.

Dowling, W.J., and D. L. Harwood (1986). Music Cognition. San Diego: Academic Press. A general text providing an abundance of information concerning the physical characteristics of musical sound and the processes involved in its perception. Topics covered include basic acoustics, physiology of hearing, music perception (e.g., timbre, consonance/dissonance, etc.), melodic organization, temporal organization, emotion and meaning, and cultural context of musical experience; abundant references to research in each of these areas are provided for further reading.

Egan, M.D. (1988). Architectural Acoustics. New York: McGraw-Hill. One of the more practical, non-intimidating and popular introductory books on the general subject of noise, isolation and room acoustics.

Ford, R.D. (1970). Introduction to Acoustics. Amsterdam: Elsevier.

Forsyth, M. (1989). Buildings for Music: The Architect, the Musician, and the Listener from the Seventeenth Century to the Present Day. Cambridge, MA: MIT Press. Coffee-table-worthy historical perspective on the leading concert venues of the last 400 years.

Gazzaniga, M.S. (1972). One Brain — Two Minds? American Scientist, 60 (3), 311-317.

Gelfand, S. (1998). Hearing, An Introduction to Psychological and Physiological Acoustics. New York: M. Dekker.

Halpern, D., Blake, R., & Hillenbrand, B. (1986). Psychoacoustics of a Chilling Sound. Perception and Psychophysics, 39 (2), 77-80.

Helmholtz, H. von. Selected Writings of Hermann von Helmholtz, edited by Russell Kahl (1971). Middletown, CT: Wesleyan University Press. Landmark writings by the nineteenth-century scientist on audiology and sonic phenomena.

Jerger, J. (1963). Modern Developments in Audiology. New York: Academic Press.

Knudsen, V., & Harris, C. (1978). Acoustical Designing in Architecture. Woodbury, NY: Acoustical Society of America. A reprint of the classic 1950 text.

Lewers, T.H., & Anderson, J.S. (1984). Some Acoustical Properties of St Paul’s Cathedral, London. Journal of Sound and Vibration, 92 (2), 285-297.

Lebo, C., & Oliphant, K. (1969). Music as a Source of Acoustic Trauma. Journal of the Audio Engineering Society, 17 (5), 535-538.

Moore, B. C. J. (1982). An Introduction to the Psychology of Hearing, 2nd ed. London: Academic.

Olson, H. F. (1967). Music, Physics, and Engineering. New York: Dover.

Oster, Gerald. (1973). Auditory Beats in the Brain. Scientific American, October, 94-102.

Pierce, J. R., & David, E. E. (1958). Man’s World of Sound. New York: Doubleday.

Pierce, J. R. (1983). The Science of Musical Sound. New York: Scientific American Books. Marvelously approachable and beautifully illustrated, a great place to begin an investigation into the physical and psychoacoustical issues of sound.

Plomp, R. (1964). The Ear as a Frequency Analyzer. Journal of the Acoustical Society of America, 36 (9), 1628-1636.

Plomp, R. (1965). Tonal Consonance and Critical Bandwidth. Journal of the Acoustical Society of America, 37, 548-560.

Rigden, J. S. (1985). Physics and the Sound of Music. New York: John Wiley.

Roederer, J. G. (1975). Introduction to the Physics and Psychophysics of Music, 2nd ed. New York: Springer. A good introduction to the subject.

Shankland, Robert S. (1973) Acoustics of Greek theatres. Physics Today, Oct, 30-35.

Shepard, R. (1964). Circularity in Judgments of Relative Pitch. Journal of the Acoustical Society of America, 36 (12), 2346-2353. So-called Shepard tones are the aural equivalent of the barber pole, always appearing to move upwards without end.

Slarve, Richard N., & Johnson, Daniel L. (1975) Human Whole-Body Exposure to Infrasound. Aviation, Space, and Environmental Medicine, 46 (4), 428-431.

Stevens, S. S., & Davis, H. (1938). Hearing. New York: Wiley.

Stevens, S. S., & Warshofsky, F. (1965). Sound and Hearing. New York: Time-Life.

Taylor, C. A. (1965). The Physics of Musical Sounds. New York: American Elsevier.

Tobias, J. V., ed. (1972). Foundations of Modern Auditory Theory. New York: Academic.

Von Bekesy, G. (1957). Sensations on the Skin Similar to Directional Hearing, Beats and Harmonics of the Ear. Journal of the Acoustical Society of America, 29 (4), 489-501.

Von Bekesy, Georg. (1957). The Ear. Scientific American, 197 (2), 66-78.

Wallach, H., E. B. Newman, and M. R. Rosenzweig. The Precedence Effect in Sound Localization. American Journal of Psychology, 57 (1949): 315-336. In a reverberant room, two similar sounds reach a subject’s ears from different directions, with one sound following the other after a short delay; yet the subject fuses them into a single sound and localizes this sound based on the source of the first sound to reach the ears. The authors study this perceptual phenomenon, which they term the “precedence effect,” and which is also referred to as the “Haas effect” or the “law of the first wavefront.”

Zwicker, E. (1957). Critical Bandwidth in Loudness Summation. Journal of the Acoustical Society of America, 29 (5), 548-557.

LISTENING & SOUND COGNITION

Bregman, A. S. (1990). Auditory Scene Analysis. Cambridge, MA: MIT Press. Bregman provides a comprehensive theoretical discussion of the principal factors involved in the perceptual organization of auditory stimuli, especially Gestalt principles of organization in auditory stream segregation.

Clynes, M., ed. (1982). Music, Mind, and Brain: The Neuropsychology of Music. New York: Plenum.

Cohen, J. (1962). Information Theory and Music. Behavioral Science, 7, 137-163.

Deutsch, D. (1969). Music Recognition. Psychological Review, 76 (3), 300-307.

Deutsch, D., ed. (1982). The Psychology of Music. New York: Academic.

Dixon, N. F. (1971). Subliminal Perception. London: McGraw-Hill.

Handel, S. (1989). Listening. MIT Press. This book combines broad and detailed coverage of auditory topics in one source, including sound production (especially by musical instruments and by voice), propagation, modelling, and the physiology of the auditory system. It covers parallels between speech and music throughout.

Langer, S. K. (1951). Philosophy in a New Key. New York: Mentor.

Laske, O. E. (1977). Music, Memory and Thought. Ann Arbor: University Microfilms International.

Laske, O. E. (1980). Toward an Explicit Cognitive Theory of Musical Listening. Computer Music Journal, 4 (2), 73-83.

McAdams, S., & E. Bigand, eds. (1993). Thinking in Sound: The Cognitive Psychology of Human Audition. Oxford: Clarendon.

McAdams, S. ed. (1987). Music and Psychology: A Mutual Regard, vol. 2 pt. 1, Contemporary Music Review.

McAdams, S., & Bregman, A. (1979). Hearing Musical Streams. Computer Music Journal, 3 (4), 26-43.

McGregor, Graham, & White, R.S. (1986). The Art of Listening. London: Croom Helm.

Merriam, A. P. (1964). The Anthropology of Music. Chicago: Northwestern Univ. Press.

Metz, C. (1985). Aural Objects. In E. Weiss & J. Belton, eds. Film Sound, Columbia University Press.

Meyer, L. B. (1956). Emotion and Meaning in Music. Chicago: Univ. of Chicago Press.

Minsky, M. (1981). Music, Mind and Meaning. Computer Music Journal, 5 (3), 28-44.

Moles, A. (1966). Information Theory and Esthetic Perception. Urbana: Univ. of Illinois Press.

Moray, N. (1969). Listening and Attention. Harmondsworth: Penguin.

O’Leary, A., and G. Rhodes. Cross-Modal Effects on Visual and Auditory Object Perception. Perception & Psychophysics, 35 (1984): 565-569. Using a display that combined a stimulus for auditory stream segregation with its visually apparent movement analog, these Stanford University researchers demonstrated cross-modal influences between vision and audition on perceptual organization. Subjects hearing the same auditory sequence perceived it as two tones if a concurrent visual sequence was presented that was perceived as two moving dots, and one tone if a concurrent visual sequence perceived as a single object was presented.

Patterson, B. (1974). Musical Dynamics. Scientific American, 231 (5), 78-95.

Peacock, K. Synesthetic Perception: Alexander Scriabin’s Color Hearing. Music Perception, 2(4) (1985): 483-506. A curious phenomenon which has surfaced repeatedly since the late Baroque era has come to be known as synaesthesia. It was used by the Romanticists of the nineteenth century as an effective means to enrich their accounts of sensuous impressions. Other names for the phenomenon include chromesthesia, photothesia, synopsia, color hearing, and color audition. People who have this characteristic experience a crossover between one or more sensory modes. Thus, they might be blessed with the ability to hear colors or odors, or see sounds. People who habitually perceive stimuli in this manner are often surprised when told that not everyone shares this faculty. Color hearing, though only one form of synaesthesia, is probably the commonest.

Seashore, C. E. (1938). The Psychology of Music. New York: McGraw-Hill.

Yates, A. J. (1963). Delayed Auditory Feedback. Psychological Bulletin, 60, 213-232.

RELATED TOPICS

Ackerman, D. (1990). A Natural History of the Senses. New York: Vintage.

Computer Music Journal. Cambridge, MA: MIT Press. CMJ is the most important source for information on new sound synthesis algorithms, computer music composition techniques, computer-assisted music analysis programs, and a host of other issues.

Dennett, D. C. (1996). Kinds of Minds: Toward an Understanding of Consciousness. New York: Basic.

Gazzaniga, M.S., ed. (1995). The Cognitive Neurosciences. Cambridge, MA: MIT Press. A fascinating, if daunting 1400-page compendium on the mechanisms involved in human sensory and motor systems, memory, thought, emotions, consciousness and brain evolution.

Levenson, T. (1994). Measure for Measure: A Musical History of Science. New York: Simon & Schuster.